Inspired by Paul Shan

Before starting, I would like to state that this article is not about the basics of JavaScript arrays, nor is it about teaching syntax or showing usage examples. It is about memory representation, optimization, behavior differences across syntaxes, performance, and the recent evolution of arrays.

When I started using JavaScript for the first time, I was already familiar with C, C++, C#, etc. And trust me, like many other C/C++ people, I didn’t have a good first date with JavaScript either.

One of the major reasons I didn’t like JavaScript was its Array. Because JavaScript arrays are implemented as hash-maps or dictionaries and are not contiguous, I felt this was a B-grade language that couldn’t even implement an array properly. But since then, both JavaScript and my understanding of JavaScript have changed… a lot.

Why JavaScript arrays are not actual arrays

Before saying anything about JavaScript, let me explain what an array is.

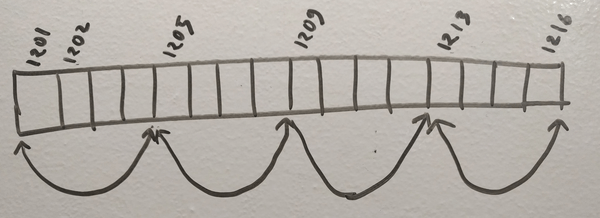

An array is a run of contiguous memory locations used to hold values. The emphasis here is on the word contiguous, because it has a significant effect.

The picture above shows the memory representation of an array. It is made to hold 4 elements of 4 bits each, so it takes up 16 bits of memory, with all the elements stored in order.

Suppose I’ve declared tinyInt arr[4]; and it occupies a series of memory blocks starting at address 1201. If at some point I read arr[2], the program performs a simple calculation to find the address of arr[2], something like 1201 + (2 × 4), and reads directly from address 1209.
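The address arithmetic described above can be sketched in a few lines. This is only an illustration: `elementAddress`, `BASE_ADDRESS`, and `ELEMENT_SIZE` are hypothetical names, and the numbers mirror the example in the text rather than real memory addresses.

```javascript
// Hypothetical values taken from the example in the text.
const BASE_ADDRESS = 1201; // where the array starts
const ELEMENT_SIZE = 4;    // size of one element, in the same units as the address

// A contiguous array finds any element with one multiplication and one addition.
function elementAddress(base, index, elementSize) {
  return base + index * elementSize;
}

console.log(elementAddress(BASE_ADDRESS, 2, ELEMENT_SIZE)); // 1209
```

The cost of this lookup does not depend on the index: element 2 and element 2,000,000 take the same single calculation.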

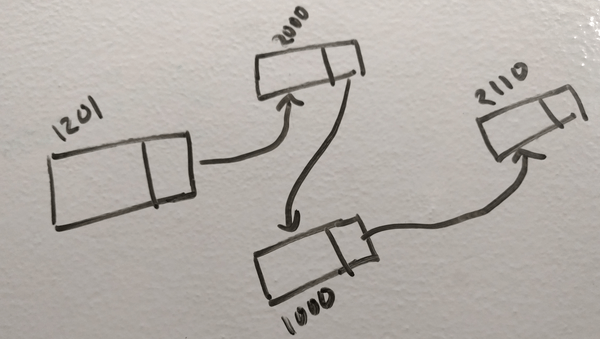

In JavaScript, an array is a hash-map. It can be implemented using various data structures, one of which is a linked list. So if you declare an array in JavaScript with var arr = new Array(4); it will create a structure like the one in the picture above. Thus, if you want to read arr[2] at any point in your program, it has to traverse the list starting from 1201 to find the address of arr[2].

This is how JavaScript arrays differ from actual arrays. Obviously, a mathematical calculation takes far less time than a linked-list traversal, and for long arrays the difference only gets worse.
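To make the traversal cost concrete, here is a minimal sketch of an index lookup on a singly linked list. It is an illustration of the idea only, not how any particular engine stores arrays; `buildList` and `listGet` are hypothetical helpers.

```javascript
// Build a singly linked list holding the given values.
function buildList(values) {
  let head = null;
  for (let i = values.length - 1; i >= 0; i--) {
    head = { value: values[i], next: head };
  }
  return head;
}

// Reaching index n means following n "next" pointers from the head.
function listGet(head, index) {
  let node = head;
  let hops = 0;
  while (node !== null && hops < index) {
    node = node.next;
    hops++;
  }
  return { value: node === null ? undefined : node.value, hops };
}

const list = buildList([10, 20, 30, 40]);
console.log(listGet(list, 2)); // { value: 30, hops: 2 }
```

The number of hops grows with the index, which is why long lists are slower to read than contiguous arrays.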

Evolution of JavaScript arrays

Remember the days when we used to feel jealous if a friend got 256 MB of RAM in their computer? Today, 8 GB of RAM is a common thing.

In the same way, JavaScript as a language has evolved a lot. With the immense effort put in by V8, SpiderMonkey, and TC39, and with the growing number of web users, JavaScript has become a necessity for the world. And performance improvement is an obvious need when you have a huge user base.

JavaScript engines these days actually allocate contiguous memory for arrays if the array is homogeneous (all elements of the same type). Good programmers keep their arrays homogeneous, and the JIT (just-in-time) compiler takes advantage of that to compute array reads the same way a C compiler does.

But the moment you insert an element of a different type into that homogeneous array, the JIT tears down the entire array and recreates it in the old style.

So, if you are not writing bad code, JavaScript Array objects maintain an actual array behind the scenes, which is really great for modern JS developers.
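An engine's internal representation cannot be inspected from plain JavaScript, so the sketch below only checks the property the text describes: whether all elements share one type. `isHomogeneous` is a hypothetical helper, and comparing `typeof` results is a rough proxy, since engines may use finer-grained internal categories.

```javascript
// Hypothetical helper: true when every element has the same typeof result.
// This is a rough proxy only; it says nothing about the engine's actual layout.
function isHomogeneous(arr) {
  if (arr.length === 0) return true;
  const firstType = typeof arr[0];
  return arr.every((item) => typeof item === firstType);
}

const numbers = [1, 2, 3, 4];
console.log(isHomogeneous(numbers)); // true

numbers.push({ a: 22 }); // mixing in an object makes the array heterogeneous
console.log(isHomogeneous(numbers)); // false
```

A check like this could be used in tests or debugging to catch accidental mixing of types in a performance-sensitive array.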

Not only that, arrays have evolved even further with ES2015 (ES6). TC39 decided to include typed arrays in JavaScript, and thus we have ArrayBuffer today.

ArrayBuffer gives you a chunk of contiguous memory and lets you do whatever you want with it. However, dealing directly with memory is very low level and complicated, so we have views to work with an ArrayBuffer. Several views are already available, and more can be added in the future.

var buffer = new ArrayBuffer(8);

var view = new Int32Array(buffer);

view[0] = 100;
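As a small extension of the snippet above, the following sketch puts two views over one buffer to show that a view does not own the data; it only interprets the same bytes. The variable names are illustrative.

```javascript
const buffer = new ArrayBuffer(8);        // 8 bytes of raw memory
const int32View = new Int32Array(buffer); // 8 / 4 = 2 elements
const byteView = new Uint8Array(buffer);  // 8 / 1 = 8 elements

int32View[0] = 100;

console.log(int32View.length); // 2
console.log(byteView.length);  // 8

// The byte view sees the same write. Which of the first four bytes holds 100
// depends on the platform's byte order, so only their sum is checked here.
const sum = byteView[0] + byteView[1] + byteView[2] + byteView[3];
console.log(sum); // 100
```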

If you want to know more about the usage of Typed Arrays in JavaScript, you can go through the MDN Documentation.

Typed arrays are performant and efficient. They were introduced at the request of the WebGL people, who were facing immense performance issues without a way to handle binary data efficiently. You can also share memory across multiple web workers using SharedArrayBuffer to get a performance boost.
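Here is a minimal sketch of the shared-memory idea. To stay short and runnable it uses a single thread, with two views standing in for two workers. In a real program the buffer would be passed to a worker with postMessage, which shares the memory instead of copying it. It assumes an environment where SharedArrayBuffer is enabled.

```javascript
// 4 bytes of memory that can be shared between threads.
const shared = new SharedArrayBuffer(4);

// Two views over the same shared memory. In a real program the second view
// would typically be created inside a worker that received `shared`.
const counterA = new Int32Array(shared);
const counterB = new Int32Array(shared);

// Atomics performs reads and writes that are safe under concurrent access.
Atomics.store(counterA, 0, 5);
Atomics.add(counterB, 0, 3);

console.log(Atomics.load(counterA, 0)); // 8
```

Plain indexed reads and writes also work on these views, but Atomics is the safer choice once more than one thread touches the same element.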

Amazing, right? It started with simple hash-maps, and now we are dealing with SharedArrayBuffer.

Old Array vs Typed Array – performance

We’ve talked a lot about the evolution of arrays in JavaScript. Now let’s check how beneficial the modern arrays are. I ran some small tests, all with Node.js 8.4.0 on a Mac.

Old Array – insertion

var LIMIT = 10000000;

var arr = new Array(LIMIT);

console.time("Array insertion time");

for (var i = 0; i < LIMIT; i++) {

arr[i] = i;

}

console.timeEnd("Array insertion time");

Time taken: 55ms

Typed Array – insertion

var LIMIT = 10000000;

var buffer = new ArrayBuffer(LIMIT * 4);

var arr = new Int32Array(buffer);

console.time("ArrayBuffer insertion time");

for (var i = 0; i < LIMIT; i++) {

arr[i] = i;

}

console.timeEnd("ArrayBuffer insertion time");

Time taken: 52ms

Damn, what am I seeing? The performance of the old traditional array and ArrayBuffer is the same? Nope. Remember, I said earlier that engines these days are smart and internally convert a traditional array into an actual contiguous-memory array if it’s homogeneous. That’s exactly what happened in the first example. Even though I used new Array(LIMIT), the engine still maintained a modern array behind it.

Now let’s modify the first example to make the array heterogeneous and see if there’s any performance difference.

Old Array – insertion (heterogeneous)

var LIMIT = 10000000;

var arr = new Array(LIMIT);

arr.push({a: 22});

console.time("Array insertion time");

for (var i = 0; i < LIMIT; i++) {

arr[i] = i;

}

console.timeEnd("Array insertion time");

Time taken: 1207ms

All I have done here is add a new expression on line 3 to make the array heterogeneous. Everything else is exactly the same as before. But look at the performance difference: it’s 22 times slower.

Old Array – read

var LIMIT = 10000000;

var arr = new Array(LIMIT);

arr.push({a: 22});

for (var i = 0; i < LIMIT; i++) {

arr[i] = i;

}

var p;

console.time("Array read time");

for (var i = 0; i < LIMIT; i++) {

p = arr[i];

}

console.timeEnd("Array read time");

Time taken: 196ms

Typed Array – read

var LIMIT = 10000000;

var buffer = new ArrayBuffer(LIMIT * 4);

var arr = new Int32Array(buffer);

// Populate the typed array first; only the reads below are timed.

for (var i = 0; i < LIMIT; i++) {

arr[i] = i;

}

console.time("ArrayBuffer read time");

for (var i = 0; i < LIMIT; i++) {

var p = arr[i];

}

console.timeEnd("ArrayBuffer read time");

Time taken: 27ms

Conclusion

The introduction of typed arrays in JavaScript is a great step. Int8Array, Uint8Array, Uint8ClampedArray, Int16Array, Uint16Array, Int32Array, Uint32Array, Float32Array, Float64Array, etc. are typed array views, which use the native byte order. However, you can also use DataView to create your own custom view. Hopefully, in the future we will find more DataView libraries that make ArrayBuffer easier to use.
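The difference between the typed array views and DataView can be sketched briefly: DataView lets you state the byte order on every read and write instead of relying on the platform's native order. The values below are illustrative.

```javascript
const buffer = new ArrayBuffer(4);
const view = new DataView(buffer);

// Third argument: true = little-endian, false (or omitted) = big-endian.
view.setInt32(0, 1, true);
console.log(view.getUint8(0)); // 1 (least significant byte comes first)

view.setInt32(0, 1, false);
console.log(view.getUint8(0)); // 0
console.log(view.getUint8(3)); // 1 (least significant byte comes last)

// Reading back with the same byte order used for writing returns the value.
console.log(view.getInt32(0, false)); // 1
```

This makes DataView a reasonable fit for binary file formats and network protocols, where the byte order is fixed by the format rather than by the machine.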

It’s nice to see that arrays have improved in JavaScript. They are now fast, efficient, robust, and smart about allocating memory.
